The Evolution of AI: Mixture-of-Recursions Architecture

In the rapidly evolving field of Artificial Intelligence (AI), advances in architecture keep pushing the boundaries of performance and efficiency. The newest arrival on the scene is an architecture known as Mixture-of-Recursions (MoR).

This new architecture promises to substantially reduce large language model (LLM) inference costs and memory usage without compromising on performance.

Revolutionizing AI with MoR

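Put simply, recursion is a process in which a function calls itself while it is executing. In a neural network, the analogous idea is to apply the same set of layers several times in a row instead of stacking many distinct layers. Because the same weights are reused on every pass, the model has fewer unique parameters to store, which is where the memory savings come from.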
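To make the idea concrete, here is a minimal Python sketch of recursion. The function names (`factorial`, `apply_repeatedly`) are illustrative only and are not taken from the MoR work; the second function simply shows what "reusing one step several times" looks like in code.

```python
from typing import Callable


def factorial(n: int) -> int:
    """Return n! by having the function call itself on a smaller input."""
    if n <= 1:  # base case: stops the recursion
        return 1
    return n * factorial(n - 1)  # recursive case: the function calls itself


def apply_repeatedly(step: Callable[[float], float], x: float, depth: int) -> float:
    """Apply the same `step` function `depth` times, reusing one definition."""
    if depth == 0:  # nothing left to apply
        return x
    return apply_repeatedly(step, step(x), depth - 1)


if __name__ == "__main__":
    print(factorial(5))
    print(apply_repeatedly(lambda v: v * 2, 1.0, 3))
```

The point of `apply_repeatedly` is that only one `step` is ever defined, no matter how many times it is applied.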

The rapid growth of AI applications has created a need for new methods to manage rising compute and memory demands. This is the gap MoR aims to fill: it builds on the strengths of recursion and adds a "mixture" component on top, with the goal of making AI models markedly more efficient.

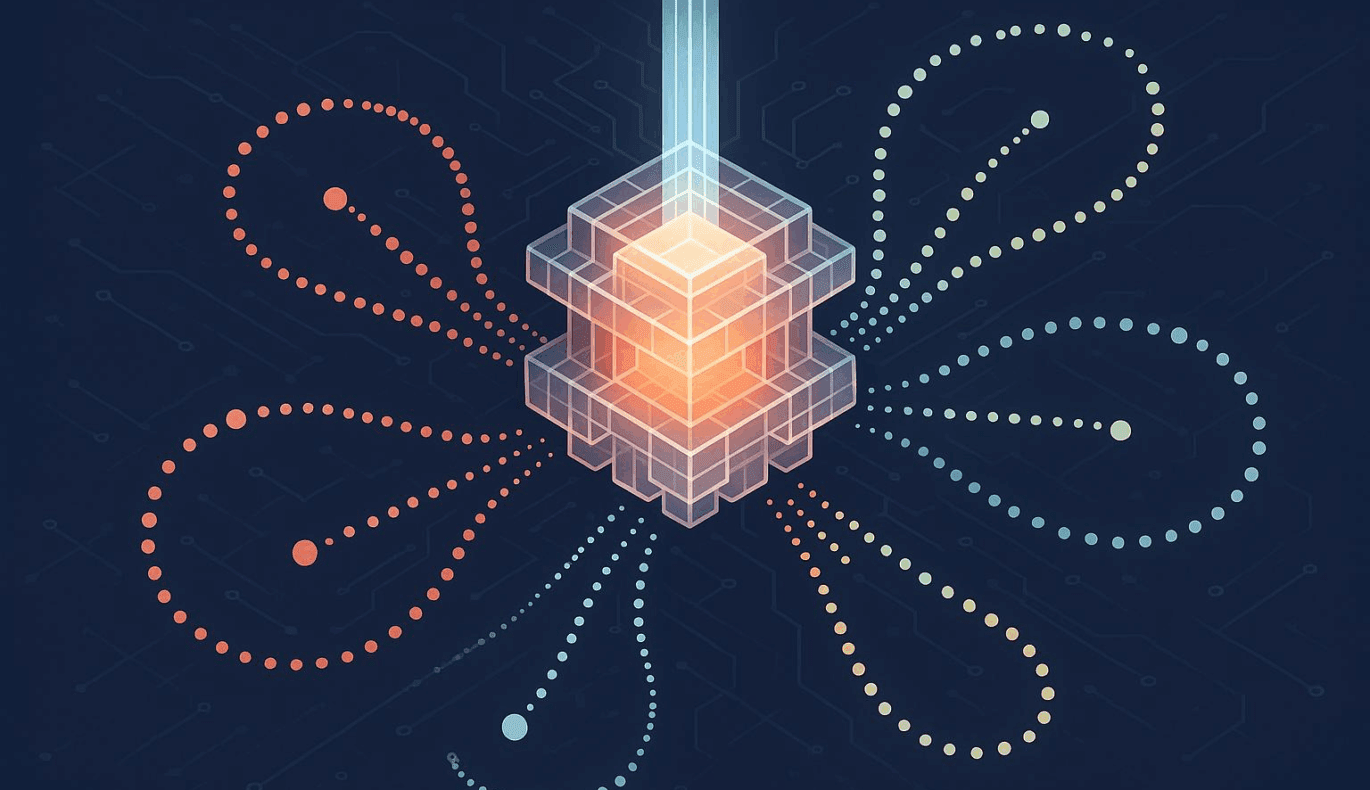

MoR can be visualized as a flexible variant of a recursive model. A conventional recursive model passes every token through the same shared block of layers a fixed number of times. MoR, as described by its authors, instead lets a lightweight router decide, token by token, how many recursion steps each token receives. Tokens that need little processing exit early, while harder tokens pass through the shared block more times, and cached attention data only has to be kept for tokens that are still active at a given step. This "mixture" of recursion depths is the source of the savings in both computational cost and memory use.
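The token-level routing idea from the MoR paper (one shared block, with a different number of passes per token) can be illustrated with a toy sketch. This is not the authors' implementation: `shared_block`, `route_depths`, and `mixture_of_recursions` are hypothetical names, the "block" is a trivial arithmetic function rather than a stack of Transformer layers, and the router is a fixed heuristic standing in for what would be a small learned module.

```python
from typing import List, Tuple

Vector = List[float]


def shared_block(h: Vector) -> Vector:
    """Stand-in for the shared layers; the same function is reused at every step."""
    return [0.5 * x + 1.0 for x in h]


def route_depths(tokens: List[Vector], max_depth: int) -> List[int]:
    """Hypothetical router: tokens with larger magnitude get more recursion steps.

    Assumption for illustration only; a real router would be learned.
    """
    depths = []
    for h in tokens:
        score = sum(abs(x) for x in h)
        depths.append(min(max_depth, 1 + int(score)))
    return depths


def mixture_of_recursions(
    tokens: List[Vector], max_depth: int = 3
) -> Tuple[List[Vector], List[int], int]:
    """Apply the shared block to each token only for its assigned number of steps."""
    depths = route_depths(tokens, max_depth)
    states = [list(h) for h in tokens]  # copy so the inputs are not mutated
    block_calls = 0
    for step in range(max_depth):
        for i, depth in enumerate(depths):
            if step < depth:  # token is still active at this recursion step
                states[i] = shared_block(states[i])
                block_calls += 1
    return states, depths, block_calls


if __name__ == "__main__":
    toy_tokens = [[0.0, 0.0], [1.0, 0.5], [4.0, 4.0]]
    states, depths, calls = mixture_of_recursions(toy_tokens, max_depth=3)
    print(depths, calls)
```

In this toy run the three tokens are assigned depths 1, 2, and 3, so the shared block is called 6 times rather than the 9 calls needed if every token went through all three steps. Real savings depend on how a trained router distributes depths, which this sketch does not model.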

The practical benefits of the MoR architecture cannot be overstated. Reductions in LLM inference costs and memory usage translate into real savings, both financially and in terms of physical resources. Importantly, these reductions are not reported to come at the expense of performance. According to the reported tests, MoR performs on par with traditional recursive models and in some cases outperforms them.

MoR holds real potential to shift the AI landscape as we know it. If its efficiency gains hold up in practice, the approach could pave the way for more complex AI models that drive future innovations.

As AI continues to permeate every facet of our lives, the importance of such developments cannot be overstated. It’s not just about the capability to drive larger, more complex computations; it’s about doing so in a manner that is sustainable, efficient, and able to keep up with the ever-quickening pace of tech advancement.

The Mixture-of-Recursions model is a notable development that underscores the potential of AI and once again highlights the perseverance and ingenuity that characterize this field.

To learn more about the ins and outs of the MoR architecture, see the original article on VentureBeat, which offers an extensive dive into the specifics of this new development in the world of AI.