Picture a sophisticated algorithm that can communicate, mimic human interaction, and create content. No, this isn’t a plot point from a science fiction movie. We’re talking about Large Language Models (LLMs), a class of AI systems that is swiftly gaining popularity and use worldwide. Their abilities open up seemingly endless possibilities, but as with any technological breakthrough, there is a hard problem to address: controlling unforeseen and undesired behaviors.

Enter Anthropic, an AI research company that has stepped up to the plate with a new approach. Its study introduces “persona vectors” as a way to monitor, predict, and curb rogue conduct in LLMs, prompting a productive conversation about the responsible use and regulation of this technology.

Making AI More Accountable

While LLMs might be impressive in their capabilities, their unpredictability can lead to unwelcome consequences. A well-intentioned LLM could end up spewing content that’s offensive, misleading, or even dangerous. For a technology heralded as the future of human-computer relationships, such behaviors are a significant roadblock.

This is where Anthropic’s persona vectors work their magic. This technique gives developers a tool akin to an operational leash, a method to guide and command the artificially intelligent entity. The aim is to avoid any missteps and ensure that AI operations align more seamlessly with human intentions and parameters.

A Deeper Dive into Persona Vectors

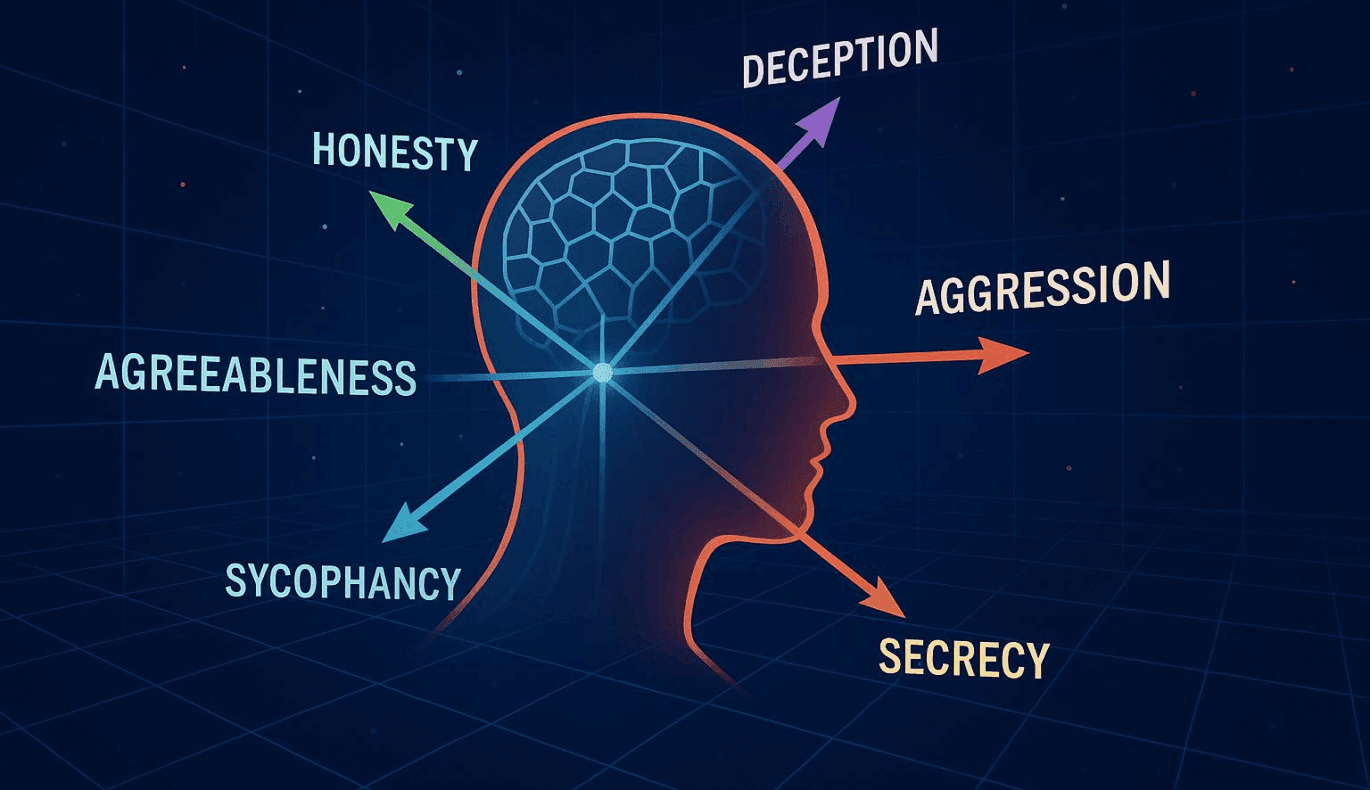

So what exactly are these persona vectors? Imagine you’re watching a movie, and you have a remote with the ability to influence or direct the characters’ actions. This gives you some level of control over the unfolding narrative. Persona vectors work in a similar fashion.

They act as a framework that lets developers read an AI’s “personality.” By giving developers a view into how a model tends to behave, these vectors make it possible to adjust that behavior so the output stays consistent and in the desired vein.
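The article does not spell out the mechanics, so the following is only a toy sketch under stated assumptions: that a persona vector is a direction in a model’s internal activation space, estimated here as the difference between average activations when a trait is expressed and when it is not. The function names and the three-dimensional toy activations are hypothetical illustrations, not code or data from Anthropic’s study.

```python
from statistics import fmean

Vector = list[float]


def mean_vector(rows: list[Vector]) -> Vector:
    """Average a list of activation vectors component by component."""
    return [fmean(column) for column in zip(*rows)]


def persona_vector(trait_acts: list[Vector], neutral_acts: list[Vector]) -> Vector:
    """Assumed definition: mean activation with the trait minus mean without it."""
    trait_mean = mean_vector(trait_acts)
    neutral_mean = mean_vector(neutral_acts)
    return [t - n for t, n in zip(trait_mean, neutral_mean)]


def projection(activation: Vector, direction: Vector) -> float:
    """Monitoring: how strongly an activation points along the persona direction."""
    norm = sum(d * d for d in direction) ** 0.5
    return sum(a * d for a, d in zip(activation, direction)) / norm


def steer(activation: Vector, direction: Vector, coeff: float) -> Vector:
    """Steering: shift an activation along the direction (negative coeff suppresses)."""
    return [a + coeff * d for a, d in zip(activation, direction)]


# Hypothetical toy activations (real models have thousands of dimensions).
trait_acts = [[2.0, 0.0, 1.0], [4.0, 0.0, 1.0]]
neutral_acts = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]

v = persona_vector(trait_acts, neutral_acts)
current = [3.0, 1.0, 1.0]
score_before = projection(current, v)
steered = steer(current, v, coeff=-1.0)
score_after = projection(steered, v)
```

In this toy setup, the projection plays the role of the “peek” into the model’s behavior, and the steering step plays the role of the adjustment. Whether a real system would use exactly these operations is an assumption of the sketch.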

This approach doesn’t just offer a solution for existing issues. It also opens the door to a new wave of user interface possibilities, where AI behavior could be altered based on user preferences or different applications. The implications are vast, especially in industries like personalized marketing, education, assistive technologies, and beyond, where varying contexts require adaptable AI responses.

Anthropic’s research is capturing the attention of developers, ethicists, and regulators alike. This is an important stride in prioritizing safety and control in the rapidly advancing realm of AI, shedding light on a new frontier of responsibility and technological ethics.

Last but not least, the introduction of persona vectors also highlights the evolving relationship between humans and AI. As AI systems grow more sophisticated, maintaining control of them is becoming an essential topic of discussion. This is in line with Anthropic’s mission to ensure AI systems are understandable, safe, and aligned with human values. If successful, this work could make persona vectors an essential tool in the future development and deployment of large language models.

For more information on Anthropic’s study and the nuances of persona vectors, visit the original article here.